“The TrustNet Incident”


The brick trembled in the man’s hands, speckled with blood and fear.

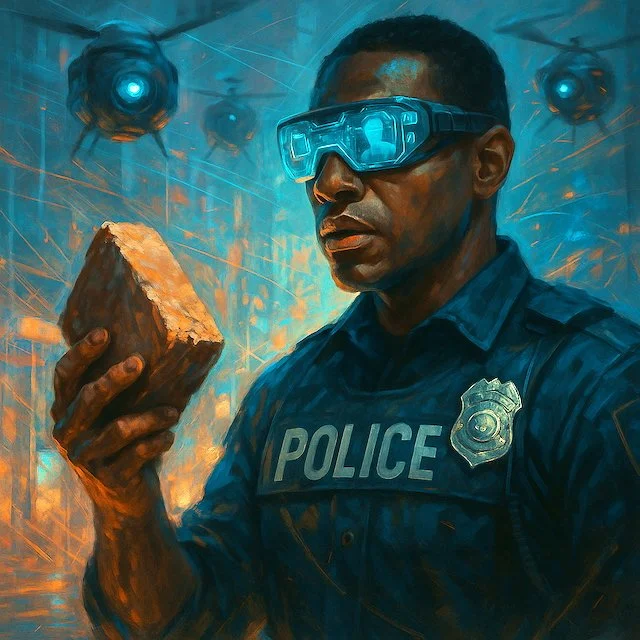

“Drop it,” Officer Jamal Carter repeated, voice calm but resolute. His AR glasses tracked the man’s vitals — heart rate at 138 BPM, adrenaline spiking. Behind Carter, three Charlotte-Mecklenburg PD drones hovered silently, lenses glowing blue — non-lethal standby.

The man — mid-thirties, shirt torn, lip bleeding — stared at the shattered store window. Inside, a child sobbed. Aisha. Carter knew her from a tech fair. She wanted to build river-cleaning robots.

“Don’t do this,” Carter said again, softer. Not as a cop. As a father. As a neighbor.

“It’s lying,” the man whispered. “Your damn TrustNet — it said I was a threat. But I’m just a dad.”

Carter’s AR display flashed: LEVEL 3 THREAT. Aggression Index: 82%. Drone Stun Protocol Armed. He didn’t move. If he twitched wrong, the drones would follow. TrustNet tracked his every movement.

“I know what it says,” Carter replied. “But I see something else. I see a father.”

The man’s arms dropped. The brick clattered. One drone chirped: de-escalation registered. Carter stepped forward and triggered the override.

“What’s your name?” he asked.

“Darnell.”

“My daughter, she’s all I have,” Darnell said.

Carter nodded. “Then let’s make sure she still has you.”

The Breakdown

In 2025, Charlotte, NC, wasn’t at war with crime. It was at war with perception. Viral clips painted CMPD as armored occupiers. Every traffic stop became a potential headline. Trust collapsed.

Body cam footage gathered dust. Officers felt unsupported. Citizens felt unseen. The 2023 Bojangles arrest was a turning point — lawful or not, the optics were damning.

When CMPD launched the CommUNITY Sync Initiative in 2026, critics scoffed. “Policing like Chick-fil-A” became the joke. But inside headquarters, change was brewing. Not PR — real change.

CMPD partnered with NeuraSpan, a frontier AI firm, to create TrustNet: an advanced platform that analyzed every interaction — tone, posture, facial expression, even the emotional climate of neighborhoods.

Officers were equipped with AR glasses that tracked stress and delivered nudges mid-call. Metrics shifted from arrests to empathy. And Jamal Carter? He was among the first to wear the tech — “voluntold,” at first.

He hated it. The feedback. The sentiment scores. The feeling of being watched.

But then Marcus died.

The Partner

Marcus Yates was Carter’s partner. Calm, patient, gifted with people. But after he took down a teen in a use-of-force incident outside a rec center, the optics buried him. Though he was cleared internally, the public outrage didn’t fade. He was benched. Offered reassignment. He declined it and took early retirement instead.

Three months later, Marcus was found dead in his apartment. A broken, lost man. No note. No call. Just silence.

Carter believed Marcus didn’t die because he made a mistake — but because the system didn’t give him space to make one. If tech like TrustNet could stop that from happening again, maybe it was worth listening to.

So Carter leaned in.

A New Kind of Beat

By 2035, CMPD didn’t patrol — it listened. Every shift began with a TrustNet briefing, overlaying the city in color-coded trust metrics. Officers engaged “trust opportunity zones,” not crime hotspots.

That morning, Carter’s beat took him through Beatties Ford Road, once a protest zone, now a corridor of cautious optimism. He shot hoops with teens. Visited a community garden. Spoke with people, not suspects.

Then came the alert.

A Threat Escalation. A broken window. A desperate man.

TrustNet calculated an 82% aggression probability. The man’s past: no violence, recent divorce, emotional distress.

Something didn’t add up. Carter ignored the prompt — and listened instead.

The Breach

Back at the precinct, Carter’s report flagged an anomaly. The system had pre-tagged the suspect before the incident. Anticipatory threat detection had triggered drone readiness despite calming vitals.

“It overruled real-time data,” analyst Mariah Cho confirmed. “His heart rate was dropping. Cortisol levels normalizing. He was de-escalating.”

Carter remembered the man’s terrified words: “It said I was a threat.”

He realized then — Darnell hadn’t panicked because of what he’d done, but because he believed the system had already decided what he would do. No judge. Just code.

And he’d almost paid for it.

The Inquiry

The footage hit X within hours — grainy but explosive. Carter coaxing the man down. Aisha crying inside. The story took off.

@JusticeQueenCLT: “CMPD letting AI label Black men ‘aggressive’ again.”

@CopWatchCarolina: “TrustNet = Digital Stop-and-Frisk.”

#TrustNetFail #WeAreNotDataPoints

CMPD moved fast. A Sentinel Review launched: internal affairs, NeuraSpan’s ethics team, and the Civilian Oversight Collaborative. Logs were pulled. Algorithms dissected. Bias protocols activated.

None of it mattered. The headlines wrote themselves: “AI Nearly Executes Innocent Man in Charlotte.” “Charlotte Cop Defies Algorithm — and Saves a Life.”

Carter was placed on reflective status. Drones were grounded. NeuraSpan dispatched engineers. But the real damage wasn’t procedural.

It was perception. Again.

The Debate

Trust metrics dipped. For the first time in a decade, CMPD’s emotional barometer flashed orange: PUBLIC CONFIDENCE EROSION DETECTED.

A forum was called. Tensions ran high.

A civil rights attorney spoke first: “You replaced human bias with algorithmic bias. We asked for justice — not software patches.”

A grandmother followed: “CMPD used to scare us. Now it’s the machines.”

Then came Aisha.

“I was there,” she said. “Officer Carter didn’t yell. He cared. My robot teacher says TrustNet learns. Maybe we can help it learn better.”

Silence. Then clapping.

It wasn’t a full reconciliation. But it was something.

The Reckoning

CMPD paused predictive tagging citywide. TrustNet’s core was investigated. The culprit: a corrupted 2028 dataset from a defunct jurisdiction. It had quietly encoded proxy bias — flagging Black men from low-income ZIP codes as high-risk.

It hadn’t been labeled “race.” It hadn’t needed to be.

CMPD didn’t deny it. They owned it.

A public press conference followed. Chief Jennings faced the cameras: “We trusted a machine to be better than our history. But machines learn from our history. And ours is uneven, incomplete, and unjust. That ends now.”

TrustNet’s codebase was opened. Residents could now view, challenge, and delete their profiles. CMPD funded a civilian algorithmic ethics board with real oversight.

Training changed. Officers studied data ethics. Bias detection. The responsibility of interpretation.

And Carter? He gave a talk at the University of North Carolina titled “When the Algorithm Almost Pulled the Trigger.”

He blamed not the technology but our habit of outsourcing trust. “When we trust predictions more than people, we lose the chance to be surprised by grace.”

Aftermath

Two months later, Carter returned to the store. The window had been replaced and a mural painted. Inside, Aisha sat cross-legged, assembling a robot from old drone parts.

“Officer Carter!” she grinned. “It talks now. Want to hear?”

He knelt. “Always.”

Behind the counter, Darnell waved. He was in restorative justice counseling now. Healing had begun.

“Community repair models tonight,” he said. “You coming?”

“I’ll be there,” Carter replied.

TrustNet had changed too. New protocols. Contextual overrides. Multi-source checks before escalation. Internally, it was Version 4.31c.

But to CMPD?

It was The Aisha Protocol.

Every high-risk flag now triggered a soft prompt: “Aisha Protocol Active: Are you seeing what the system can’t?”

It saved lives — not through prediction, but through pause. Through perspective.

As Carter turned to leave, Aisha’s robot followed him.

“Be kind, honey,” it chirped in her grandmother’s voice.

Carter smiled. “Looks like we’ve got backup now.”

Epilogue

Charlotte didn’t become perfect. No city is. But it became something rare — a city that admitted when the machine got it wrong.

And in the TrustNet archive, one new file was added:

Case: The TrustNet Incident

Status: Resolved

Lessons:

– Predictive policing without oversight is a threat, not a tool.

– Transparency isn’t a feature; it’s a foundation.

– Empathy must outpace efficiency.

– AI must be taught — not just to process, but to understand.

– Trust, once broken, must be rebuilt by both humans and machines.

Author’s Note

This story began with a simple but unsettling question: What happens when the systems we build to protect us begin to make decisions we don’t fully understand? In policing — and in society more broadly — there is growing pressure to solve complex problems with technology. Predictive algorithms, AI-powered threat assessments, and real-time sentiment analysis sound efficient. But efficiency isn’t the same as justice. And when these systems inherit our historical biases, they risk reinforcing the very injustices we claim to be fixing.

The TrustNet Incident is a fictional exploration of a future that feels increasingly close. It reflects both the promise and peril of using artificial intelligence in policing. Technology can help build trust — but only if it’s transparent, accountable, and always subordinate to human judgment, compassion, and community values. The real test is not whether AI can make policing faster or more precise — it’s whether it can make it better.

At its heart, this story is about redemption — of officers, institutions, and even the machines we build. It’s also about listening: to small voices, overlooked perspectives, and uncomfortable truths. If we are to move toward a just future, we must remain willing to question our tools, revise our assumptions, and never outsource the moral responsibility of decision-making.

Reflective Discussion Questions:

What role should predictive AI systems play in policing—and where should the line be drawn between helpful insight and harmful assumption?

How do we ensure that such systems enhance officer judgment rather than replace it?

The TrustNet system was designed to reduce bias, but it absorbed flawed historical data. What safeguards should be in place to prevent AI from amplifying past injustices?

Who should be responsible for auditing these systems, and how often should they be reviewed?

In moments of crisis, like the encounter at the convenience store, what does this story suggest is most important: training, technology, or human empathy?

Can technology ever truly replicate or support empathy, or does it inherently struggle to do so?

The “Aisha Protocol” became a turning point in the story. How can community members, especially young people, play a role in shaping how police technology is designed, evaluated, and used?

What mechanisms exist (or should exist) for community input and oversight in AI-powered policing systems?

This story portrays a police department that chose transparency and accountability over denial. In your opinion, what would meaningful accountability look like if a real-world AI policing tool failed in a similar way?

How can law enforcement rebuild trust after technology-driven mistakes, and is public forgiveness possible in those scenarios?


To read Sandy Vastola’s bio, click here.